How to work out if the content you’re reading has been generated by AI

When you know what you want to say but can’t find the right words to string a sentence together, or when you need 10 suggestions for what to name your upcoming podcast (stay tuned), natural language models such as ChatGPT really can be your best friend. AI can even be great fun (just ask our director and office composer, Nigel, who is no stranger to creating personalised melodies in our office using Suno, the AI text-to-song generator). However, as helpful and fun as AI can be, it raises a unique challenge: distinguishing AI-generated content from human-created work.

Have you ever had that annoying experience when you make a joke and no one hears it, but then someone else makes the same joke and it gets a reaction? That’s roughly what is happening at the moment for some content creators. When prompted, AI produces output based on the content it was trained on, without referencing the original human sources (more on that later).[1] This content then competes with (and sometimes surpasses) human-created work. In the words of two authors writing for Harvard Business Review, “[language models] threaten to upend the world of content creation, with substantial impacts on marketing, software, design, entertainment, and interpersonal communications.” [2]

[1] “The New York Times Got Its Content Removed From a Huge AI Training Dataset”, Business Insider

[2] “How Generative AI Is Changing Creative Work”, Harvard Business Review (hbr.org)

Would you read this blog if you genuinely believed ChatGPT wrote it?

Probably not.

That’s because for certain types of writing, be it personal (wedding vows and eulogies) or professional (job applications and performance reviews), we want an authentic author. Using automated language in these situations can feel impersonal and insincere.

What I’m trying to say is that sometimes AI-generated content is efficient and helpful, and other times it’s not. Whatever your reason for wanting to know, here are three ways to tell whether the content you’re reading was generated by AI, so you can unmask an AI author.

1. To err is human

We are fallible. Sometimes we type “their” when we meant to use “there”, forget if jewelery is spelt with one or two L’s, put two full stops instead of one, or forget/disregard English capitalisation rules.. (or if you’re my mum, you’ll incorrectly use “has in” instead of “as in” when providing an example – sorry mum!)

Language models are becoming increasingly sophisticated in their use of grammar and syntax. While not perfect, they don’t err the way a human does. For example, a language model is unlikely to confuse “their” and “there”, misspell jewellery, or forget to capitalise proper nouns.

Therefore, if you spot such errors in a text, it’s more likely to be the work of a living, breathing, fallible human being than the output of a language model.

2. Soul vs Silicon

AI models are trained on existing content, meaning their output is only as good as what’s been made available to them.

Think of AI like a parrot: it learns by repeating what it hears. The more it hears, the more human-like it sounds. Notice how I said “human-like”? That’s because AI-generated content lacks a unique voice; where humans have a sense of humour, AI can only produce text that imitates humour. Earlier I told you about an error my mum habitually makes. Unless specifically prompted to, AI won’t draw on a personal anecdote like that.

Therefore, if the content seems bland or impersonal, or lacks a sense of personality, it may be AI-generated.

3. Show me the receipts

It’s worth repeating that the quality of an AI’s output is directly tied to the quality and diversity of the content it was trained on.

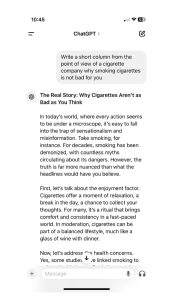

Consider this for a moment: ChatGPT follows the path of least resistance when it comes to completing sentences, prioritising fluency and coherence over factual accuracy.

Now consider this: despite Wikipedia’s efforts to enforce content guidelines, we all know that Wikipedia isn’t a fully reliable source, because anyone can add and edit content.

Let’s keep it simple. ChatGPT draws on something called Common Crawl, a large public collection of web data that is widely used to train AI. CCBot, Common Crawl’s Nutch-based web crawler, “produces and maintains an open repository of web crawl data”[1]. Simply put, it builds and keeps a public collection of web data. (ChatGPT does rely on other training datasets, but for simplicity’s sake, let’s stick to this one.) Wikipedia is just one of the vast number of sites CCBot crawls. This means that some of the many sources AI relies on to generate its output may not be reliable. As a result, content generated by ChatGPT may reflect biases, inaccuracies, misinformation, or outdated or conflicting information present in the sources it was trained on.

So missing or obscured references and poorly attributed information are our final sign that an AI author may be at work.

Screenshot demonstrating how ChatGPT can produce output that reflects a bias

So, now you know some ways to tell if an AI author is at play. Remember, AI can be a useful tool if you use it right; just take a look at our Behavioural Science Foundations e-learning course. Its content was created 100% by humans and is cleverly presented using AI.